RemoteIoT batch jobs, which process data collected from remote IoT devices in groups rather than one message at a time, have become a common workload on cloud platforms such as AWS. As more businesses adopt IoT solutions, knowing how to run these jobs efficiently in AWS helps keep performance up and costs down. This article explains the concept of RemoteIoT batch jobs and walks through practical examples of implementing them in AWS.

Cloud services make it much easier to scale IoT deployments. AWS offers managed services that help developers and businesses run complex IoT workloads, including batch processing, whether that means processing sensor data, analyzing logs, or running large-scale computations.

This article aims to provide a detailed exploration of RemoteIoT batch jobs in AWS, covering everything from foundational concepts to advanced implementation strategies. By the end of this guide, you'll have a clear understanding of how to design, deploy, and optimize batch jobs in AWS, ensuring seamless integration with your IoT infrastructure.


Table of Contents

- Introduction to RemoteIoT Batch Jobs

- AWS Ecosystem for IoT Batch Processing

- Setting Up Your AWS Environment

- Designing RemoteIoT Batch Jobs

- RemoteIoT Batch Job Example in AWS

- Optimizing Batch Jobs for Performance

- Scaling Batch Jobs in AWS

- Ensuring Security in RemoteIoT Batch Jobs

- Cost Management for Batch Processing

- Best Practices for RemoteIoT Batch Jobs

- Conclusion and Next Steps

Introduction to RemoteIoT Batch Jobs

Batch processing plays a critical role in IoT systems, where vast amounts of data are generated continuously. RemoteIoT batch jobs in AWS are designed to handle these large-scale data processing tasks efficiently. By leveraging AWS services such as AWS Batch, AWS Lambda, and Amazon EC2, developers can automate and streamline batch processing workflows.

One of the key advantages of using AWS for RemoteIoT batch jobs is scalability. Whether you're processing small datasets or very large ones, you can scale resources up or down based on demand. In addition, managed services such as AWS Lambda and Amazon S3 are designed to run across multiple Availability Zones within a Region, which helps meet the availability requirements of mission-critical IoT applications.

Why Choose AWS for RemoteIoT Batch Jobs?

AWS offers a comprehensive suite of tools and services tailored for IoT batch processing. Some of the reasons why AWS stands out include:

- Scalable infrastructure for handling large datasets

- Integration with other AWS services for seamless workflows

- Cost-effective pricing models based on usage

- Advanced security features to protect sensitive data

AWS Ecosystem for IoT Batch Processing

The AWS ecosystem provides a wide range of services that can be utilized for RemoteIoT batch jobs. Understanding how these services work together is essential for designing efficient and scalable batch processing workflows.

Key AWS services for RemoteIoT batch jobs include:

- AWS Batch: A fully managed service for running batch computing workloads in AWS.

- AWS Lambda: A serverless compute service that allows you to run code without provisioning or managing servers.

- Amazon EC2: A web service that provides resizable compute capacity in the cloud.

- AWS IoT Core: A managed cloud service that allows connected devices to securely interact with cloud applications and other devices.

How AWS Services Work Together

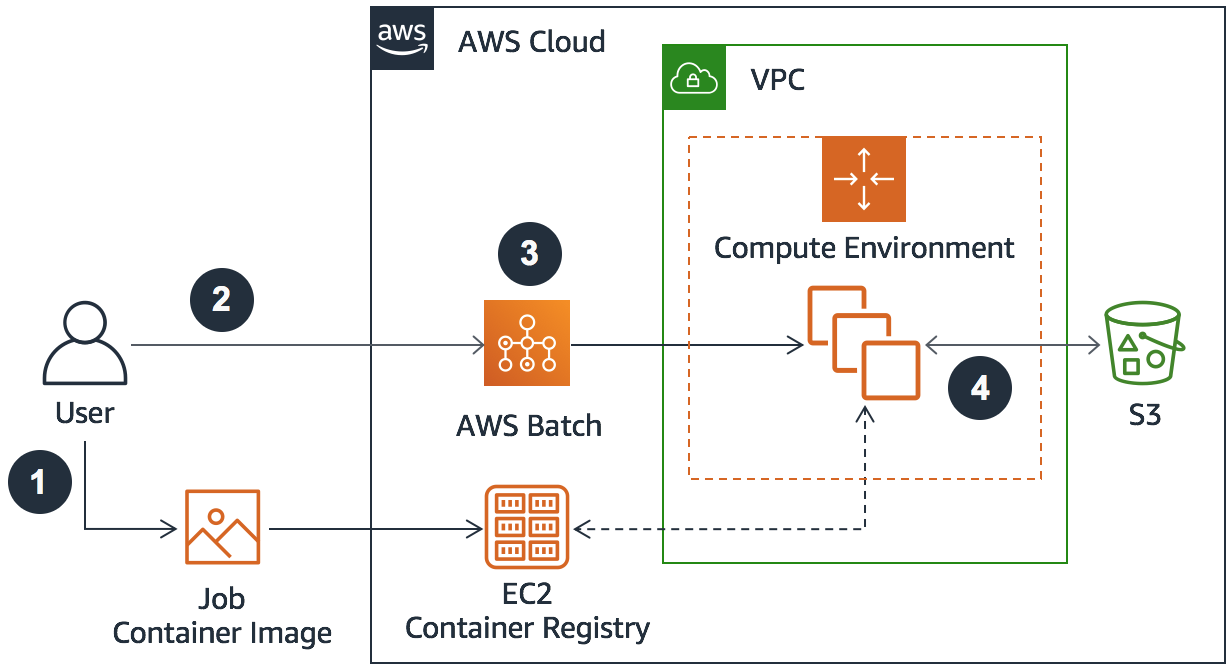

By combining these services, you can build end-to-end solutions for RemoteIoT batch jobs. For example, AWS IoT Core can collect data from devices, an AWS IoT rule can write that data to Amazon S3, and AWS Batch or AWS Lambda can then process it. This integrated approach keeps data flowing from device to storage to processing.
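
As a minimal sketch of the first hop in this pipeline, the Python snippet below builds an AWS IoT topic rule that copies each MQTT message to S3. The topic filter `sensors/+/temperature`, the bucket name, the IAM role, and the helper names are hypothetical; the role must allow AWS IoT to call `s3:PutObject` on the bucket.

```python
from typing import Any, Dict


def build_s3_rule_payload(topic_filter: str, bucket: str, role_arn: str) -> Dict[str, Any]:
    """Build a topicRulePayload that copies every matching MQTT message to S3."""
    return {
        # IoT SQL: select the full message from the (hypothetical) sensor topic
        "sql": f"SELECT * FROM '{topic_filter}'",
        "awsIotSqlVersion": "2016-03-23",
        "ruleDisabled": False,
        "actions": [
            {
                "s3": {
                    "roleArn": role_arn,  # role that lets AWS IoT call s3:PutObject
                    "bucketName": bucket,
                    # Substitution template: one object per message, grouped by topic
                    "key": "${topic()}/${timestamp()}",
                }
            }
        ],
    }


def create_rule(rule_name: str, payload: Dict[str, Any]) -> None:
    import boto3  # imported here so the payload builder works without boto3 installed

    boto3.client("iot").create_topic_rule(ruleName=rule_name, topicRulePayload=payload)
```

The `${topic()}/${timestamp()}` key is an AWS IoT substitution template, so each message lands in its own object under a topic-named prefix. Rule names may contain only letters, digits, and underscores, so call it as `create_rule("sensor_to_s3", payload)` rather than with a hyphenated name.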


Setting Up Your AWS Environment

Before diving into RemoteIoT batch job examples, it's important to set up your AWS environment properly. This involves creating an AWS account, setting up IAM roles and permissions, and configuring the necessary services.

Here are the steps to set up your AWS environment:

- Create an AWS account if you don't already have one.

- Set up IAM roles and permissions for batch processing.

- Install the AWS CLI and configure it with your credentials.

- Create an S3 bucket for storing your data.

- Set up AWS Batch or AWS Lambda for processing your batch jobs.
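
The bucket-creation step has one Region-specific wrinkle worth showing in code: S3 rejects a `LocationConstraint` of `us-east-1`, so the configuration block must be omitted there. The sketch below assumes boto3 is installed with credentials already set up via `aws configure`; the helper names are hypothetical.

```python
from typing import Any, Dict


def bucket_create_kwargs(bucket: str, region: str) -> Dict[str, Any]:
    """Arguments for S3 create_bucket; us-east-1 must omit the location constraint."""
    kwargs: Dict[str, Any] = {"Bucket": bucket}
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def create_data_bucket(bucket: str, region: str) -> None:
    import boto3  # deferred import keeps the helper testable offline

    s3 = boto3.client("s3", region_name=region)
    s3.create_bucket(**bucket_create_kwargs(bucket, region))
```

For example, `create_data_bucket("my-iot-raw-data", "eu-west-1")` creates the bucket in Ireland, while the same call with `"us-east-1"` sends only the bucket name.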

Best Practices for Setting Up Your Environment

To ensure a smooth setup process, follow these best practices:

- Grant least-privilege IAM permissions: give each role only the actions and resources it needs.

- Enable logging and monitoring (for example, AWS CloudTrail for API activity and Amazon CloudWatch for metrics and logs) to track usage and performance.

- Test your setup thoroughly before deploying it to production.
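
One way to keep IAM permissions tight is to scope them to exactly the objects a job reads. The sketch below builds an IAM policy document that lets a batch job read objects under a single prefix of a single bucket and nothing else. The bucket name, prefix, and function name are illustrative assumptions.

```python
from typing import Any, Dict


def read_only_prefix_policy(bucket: str, prefix: str) -> Dict[str, Any]:
    """IAM policy allowing a job to read objects under one prefix of one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadRawSensorData",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],  # read only: no writes or deletes
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            }
        ],
    }
```

Serialize the result with `json.dumps` before attaching it to a role, for example as the `PolicyDocument` argument of the IAM `put_role_policy` call.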

Designing RemoteIoT Batch Jobs

Designing effective RemoteIoT batch jobs requires careful planning and consideration of various factors. This includes defining the scope of your batch job, selecting the appropriate AWS services, and optimizing your workflow for performance.

Key considerations for designing RemoteIoT batch jobs include:

- Data volume and complexity

- Processing requirements and deadlines

- Resource allocation and cost management
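
Data volume is easiest to reason about with a back-of-the-envelope estimate. The function below multiplies fleet size by reading rate and record size; the fleet of 1,000 sensors reporting one 200-byte reading per minute is a hypothetical example, and the estimate ignores compression and storage overhead.

```python
def daily_volume_bytes(sensors: int, readings_per_minute: float, bytes_per_reading: int) -> int:
    """Rough raw-data volume per day, ignoring compression and protocol overhead."""
    readings_per_day = sensors * readings_per_minute * 60 * 24
    return int(readings_per_day * bytes_per_reading)


# Hypothetical fleet: 1,000 sensors, one 200-byte JSON reading per minute each
volume = daily_volume_bytes(1_000, 1, 200)
print(f"{volume / 1_000_000:.0f} MB per day")  # prints "288 MB per day"
```

At that rate a single daily batch handles 1.44 million readings, which is small enough for one job but grows linearly with fleet size and reporting frequency.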

Steps for Designing a Batch Job

Follow these steps to design a RemoteIoT batch job:

- Identify the data sources and processing requirements.

- Select the appropriate AWS services for your workflow.

- Create a detailed plan outlining the steps involved in your batch job.

- Test your design using sample data to ensure it meets your needs.
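
For the last step, a small generator of synthetic readings makes it easy to test a batch job before real devices are connected. This is a hypothetical helper; the column names, the 21 °C mean, and the fixed seed are assumptions chosen for illustration.

```python
import csv
import io
import random
from datetime import datetime, timedelta, timezone


def sample_readings_csv(sensors: int, minutes: int, seed: int = 42) -> str:
    """Generate synthetic temperature readings as CSV for testing a batch job."""
    rng = random.Random(seed)  # fixed seed so test runs are reproducible
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["sensor_id", "timestamp", "temperature_c"])
    for minute in range(minutes):
        ts = (start + timedelta(minutes=minute)).isoformat()
        for s in range(sensors):
            # Normally distributed around 21 °C with a small spread
            temp = round(rng.gauss(21.0, 0.5), 2)
            writer.writerow([f"sensor-{s:03d}", ts, temp])
    return out.getvalue()
```

You could upload the returned text to a test prefix in S3 and run the job against it, comparing the output with what you expect for such well-behaved data.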

RemoteIoT Batch Job Example in AWS

To illustrate how RemoteIoT batch jobs work in AWS, let's consider a practical example. Suppose you have an IoT system that collects temperature data from multiple sensors. You want to process this data in batches to identify patterns and anomalies.

Here's how you can implement this using AWS:

- Set up AWS IoT Core to collect data from your sensors.

- Store the data in an S3 bucket.

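- Use AWS Batch or AWS Lambda to process the data in batches. Lambda suits short jobs, since a single invocation can run for at most 15 minutes; use AWS Batch for longer-running work.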

- Analyze the results and store them in a database for further use.
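
The analysis step depends on what counts as an anomaly for your sensors. As one simple, swapped-in technique (not a method prescribed by AWS), the sketch below flags readings that lie more than three standard deviations from their sensor's mean within the batch. The `(sensor_id, temperature)` input format and the function name are assumptions.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, Iterable, List, Tuple


def find_anomalies(
    readings: Iterable[Tuple[str, float]], z_threshold: float = 3.0
) -> Dict[str, List[float]]:
    """Flag readings more than z_threshold standard deviations from their sensor's mean."""
    by_sensor: Dict[str, List[float]] = defaultdict(list)
    for sensor_id, temp in readings:
        by_sensor[sensor_id].append(temp)

    anomalies: Dict[str, List[float]] = {}
    for sensor_id, temps in by_sensor.items():
        mu, sigma = mean(temps), pstdev(temps)
        if sigma == 0:
            continue  # constant signal: nothing stands out
        flagged = [t for t in temps if abs(t - mu) / sigma > z_threshold]
        if flagged:
            anomalies[sensor_id] = flagged
    return anomalies
```

Note that a population z-score can never exceed √(n - 1), so a sensor needs more than 10 readings in a batch before any value can pass the default threshold of 3.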

Code Example

Below is a sample code snippet for processing data using AWS Lambda:

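```python
import boto3

# Create the client outside the handler so warm invocations can reuse it
s3 = boto3.client('s3')


def lambda_handler(event, context):
    # Placeholders: replace with your own bucket name and object key
    bucket_name = 'your-bucket-name'
    file_key = 'your-file-key'

    # Download the object and decode it as UTF-8 text
    response = s3.get_object(Bucket=bucket_name, Key=file_key)
    data = response['Body'].read().decode('utf-8')

    # Process the data here

    return {'message': 'Data processed successfully'}
```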
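
The handler above hard-codes the bucket and key. When the function is instead triggered by an S3 event notification, Lambda passes both in the `event` payload, with the object key URL-encoded. A minimal parsing helper, whose name is hypothetical, might look like this:

```python
import urllib.parse
from typing import Any, Dict, List, Tuple


def objects_from_s3_event(event: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 event notification delivered to Lambda."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become '+'), so decode before get_object
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs
```

Inside `lambda_handler`, you could then loop over `objects_from_s3_event(event)` and call `s3.get_object` for each pair instead of using the placeholder values.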

Optimizing Batch Jobs for Performance

Optimizing RemoteIoT batch jobs is essential for achieving the best performance and cost efficiency. This involves fine-tuning your AWS resources, optimizing your code, and leveraging advanced features provided by AWS.

Some optimization strategies include:

- Using Spot Instances to reduce costs for workloads that can tolerate interruption, since EC2 can reclaim Spot capacity with a two-minute warning.

- Implementing parallel processing to speed up data processing.

- Monitoring performance metrics and making adjustments as needed.
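
For the parallel-processing strategy, Python's standard `concurrent.futures` module is one straightforward option when the work is dominated by I/O such as S3 downloads. In the sketch below, `process_one` is a hypothetical callback you would supply (for example, one that downloads and summarizes a single object), and the helper name is also illustrative.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable


def process_in_parallel(
    keys: Iterable[str], process_one: Callable[[str], int], max_workers: int = 8
) -> Dict[str, int]:
    """Run process_one for each key on a thread pool and collect results by key."""
    key_list = list(keys)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map preserves input order, so results line up with key_list
        results = pool.map(process_one, key_list)
        return dict(zip(key_list, results))
```

Threads help here because most of the time is spent waiting on the network; for CPU-heavy parsing, a `ProcessPoolExecutor` or several separate AWS Batch jobs would be the better fit.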

Tools for Optimization

AWS provides several tools to help you optimize your batch jobs, including:

- Amazon CloudWatch for monitoring and logging.

- AWS Cost Explorer for analyzing and managing costs.

- AWS Auto Scaling for dynamically adjusting resources based on demand.
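
CloudWatch becomes more useful when jobs publish their own metrics, such as how many records each run processed. The sketch below publishes a custom metric with boto3's `put_metric_data`; the `RemoteIoT/Batch` namespace, metric name, dimension, and helper names are hypothetical choices.

```python
from typing import Any, Dict


def batch_metric(job_name: str, records_processed: int) -> Dict[str, Any]:
    """Arguments for CloudWatch put_metric_data recording one batch run."""
    return {
        "Namespace": "RemoteIoT/Batch",  # hypothetical custom namespace
        "MetricData": [
            {
                "MetricName": "RecordsProcessed",
                "Dimensions": [{"Name": "JobName", "Value": job_name}],
                "Value": float(records_processed),
                "Unit": "Count",
            }
        ],
    }


def publish(job_name: str, records_processed: int) -> None:
    import boto3  # deferred so the builder can be tested without AWS credentials

    boto3.client("cloudwatch").put_metric_data(**batch_metric(job_name, records_processed))
```

Once the metric exists, you can graph it or attach an alarm to it, for example to catch a nightly run that suddenly processes far fewer records than usual.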

Scaling Batch Jobs in AWS

Scaling RemoteIoT batch jobs is crucial for handling increasing data volumes and processing requirements. AWS offers several features and services to help you scale your batch jobs effectively.

Key scaling strategies include:

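- Letting capacity scale automatically with the workload, through AWS Batch managed compute environments or, for self-managed EC2 fleets, AWS Auto Scaling.

- Distributing work across multiple instances, for example by splitting a large job into AWS Batch array jobs that each process part of the data.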

- Optimizing your code and workflows for better performance and efficiency.
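
One concrete way to spread a large job across instances in AWS Batch is an array job: you submit one job with `arrayProperties={"size": N}`, and AWS Batch starts N child jobs, each with its own `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The sketch below shows how each child might pick its share of a list of S3 keys; `ARRAY_SIZE` is a hypothetical variable you would set yourself, for example through `containerOverrides` when submitting, and the function names are illustrative.

```python
import os
from typing import List, Sequence


def shard(items: Sequence[str], index: int, size: int) -> List[str]:
    """Contiguous slice of items assigned to child `index` out of `size` children."""
    per_child, extra = divmod(len(items), size)
    # The first `extra` children take one additional item each
    start = index * per_child + min(index, extra)
    end = start + per_child + (1 if index < extra else 0)
    return list(items[start:end])


def my_shard(items: Sequence[str]) -> List[str]:
    # AWS Batch sets AWS_BATCH_JOB_ARRAY_INDEX in each child of an array job;
    # ARRAY_SIZE is a hypothetical variable passed in by whoever submits the job
    index = int(os.environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    size = int(os.environ.get("ARRAY_SIZE", "1"))
    return shard(items, index, size)
```

Contiguous slices keep each child's keys together, which helps if objects are named by time. AWS Batch accepts array sizes from 2 to 10,000.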

Best Practices for Scaling

Follow these best practices for scaling your batch jobs:

- Monitor your workloads regularly and adjust resources as needed.

- Test your scaling strategies thoroughly to ensure they work as expected.

- Document your scaling processes for future reference and improvements.

Ensuring Security in RemoteIoT Batch Jobs

Security is a critical consideration when working with RemoteIoT batch jobs in AWS. Protecting your data and ensuring the integrity of your workflows is essential for maintaining trust and compliance.

Some security best practices include:

- Using least-privilege IAM policies and encrypting sensitive data at rest and in transit.

- Implementing network security measures such as VPCs and security groups.

- Regularly auditing your security settings and addressing any vulnerabilities.

Security Tools in AWS

AWS provides several tools to help you enhance the security of your batch jobs, including:

- AWS Identity and Access Management (IAM) for managing access and permissions.

- AWS Key Management Service (KMS) for encrypting and managing encryption keys.

- AWS Shield for protecting against DDoS attacks.
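
As an example of using KMS for encryption at rest, the sketch below writes batch results to S3 with server-side encryption under a KMS key. The bucket, object key, and KMS key ARN are placeholders, and the helper names are hypothetical.

```python
from typing import Any, Dict


def encrypted_put_kwargs(bucket: str, key: str, body: bytes, kms_key_id: str) -> Dict[str, Any]:
    """put_object arguments that ask S3 to encrypt the object with a KMS key."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_id,  # key ID or ARN of a customer managed key
    }


def write_results(bucket: str, key: str, body: bytes, kms_key_id: str) -> None:
    import boto3  # deferred so the helper can be exercised without AWS access

    boto3.client("s3").put_object(**encrypted_put_kwargs(bucket, key, body, kms_key_id))
```

The writing role also needs `kms:GenerateDataKey` on that key, and readers need `kms:Decrypt`; otherwise the S3 calls fail with an access-denied error.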

Cost Management for Batch Processing

Managing costs effectively is crucial when working with RemoteIoT batch jobs in AWS. AWS offers several tools and strategies to help you optimize your costs without compromising performance.

Key cost management strategies include:

- Using AWS Cost Explorer to analyze and manage your costs.

- Implementing cost allocation tags to track expenses by project or department.

- Optimizing your resource usage to reduce unnecessary expenses.
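
Cost allocation tags pay off when you query costs grouped by them. The sketch below builds a Cost Explorer `get_cost_and_usage` request that groups monthly unblended cost by a tag; the tag key `project` and the helper names are assumptions, and the tag must already be activated as a cost allocation tag in the Billing console before it appears in results.

```python
from datetime import date
from typing import Any, Dict


def cost_by_tag_request(tag_key: str, start: date, end: date) -> Dict[str, Any]:
    """Arguments for Cost Explorer get_cost_and_usage, grouped by a cost allocation tag."""
    return {
        # Cost Explorer treats End as exclusive
        "TimePeriod": {"Start": start.isoformat(), "End": end.isoformat()},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        "GroupBy": [{"Type": "TAG", "Key": tag_key}],
    }


def monthly_cost_by_tag(tag_key: str, start: date, end: date) -> Dict[str, Any]:
    import boto3  # deferred so the request builder can be tested offline

    return boto3.client("ce").get_cost_and_usage(**cost_by_tag_request(tag_key, start, end))
```

Because `End` is exclusive, `date(2024, 1, 1)` to `date(2024, 2, 1)` covers exactly January 2024.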

Tools for Cost Management

AWS provides several tools to help you manage your costs, including:

- AWS Budgets for setting cost limits and receiving alerts.

- AWS Trusted Advisor for getting recommendations on cost optimization.

- AWS Cost and Usage Report for detailed cost analysis.

Best Practices for RemoteIoT Batch Jobs

To ensure success with RemoteIoT batch jobs in AWS, it's important to follow best practices that cover design, implementation, optimization, and security. These practices will help you create efficient, scalable, and secure batch processing workflows.

Some key best practices include:

- Plan your batch jobs carefully and define clear objectives.

- Choose the right AWS services for your specific needs.

- Optimize your workflows for performance and cost efficiency.

- Implement robust security measures to protect your data and systems.

Continuous Improvement

Continuously improving your batch jobs is essential for staying competitive and meeting evolving business needs. Regularly review your workflows, gather feedback from stakeholders, and make adjustments as needed to ensure optimal performance and efficiency.

Conclusion and Next Steps

In conclusion, RemoteIoT batch jobs in AWS offer powerful capabilities for processing large-scale IoT data efficiently and cost-effectively. By leveraging the AWS ecosystem and following best practices, you can design, deploy, and optimize batch jobs that meet your business requirements.

We encourage you to take the following next steps:

- Experiment with the examples provided in this article to gain hands-on experience.

- Explore additional AWS services and features to enhance your batch processing workflows.

- Share your experiences and insights with the community to help others learn and grow.